FMP

From Bad Data to Better Decisions: Why Data Quality Defines Corporate Finance

Nov 24, 2025

Running a valuation screen shouldn't come to a halt because different external datasets report different TTM revenue for the same company. When financial inputs vary by definition, reporting period, or calculation method, analysts are forced to pause analysis, investigate the discrepancy, and rebuild confidence in the model. The slowdown isn't just an inconvenience — inconsistent external data creates measurable financial risk.

Across valuation, benchmarking, M&A screening, and forecasting, consistent external data is the foundation of reliable financial modeling. Standardized, governed data pipelines eliminate those discrepancies and give teams the consistency required for confident, defensible financial decisions.

The Measurable Cost of Inconsistent External Financial Data

When external financial data lacks standardization, the manual reconciliation it requires imposes tangible costs across the organization and introduces systemic risk into the investment lifecycle, slowing everything from peer analysis to strategic modeling:

- Valuation Drift: Inconsistent financial metrics, particularly fundamental ratios like EV/EBITDA or P/E, add uncertainty that gets priced in as a data-driven risk premium, which can depress the valuation multiples applied to a company or cause deal terms to misalign.

- Wasted Labor: Analysts are diverted from high-value tasks like scenario planning to investigating discrepancies, such as why one vendor's historical revenue series spans 15 years while another's covers only 10. PwC estimates that poor data quality causes unnecessary labor costs, often exceeding 15% of total analytics budgets.

- Forecast Error: Models built on data with inconsistent definitions (e.g., mixing fiscal-year with calendar-year figures) produce unreliable forecasts and slow decision-making, causing teams to miss key windows for asset allocation. Those missed windows are where the risk premium of missing or inconsistent data turns into a measurable cost.
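Part of the reconciliation described under Wasted Labor can be scripted instead of done by eye. The sketch below compares two vendors' annual revenue series for the same company, flags years that only one vendor covers (the 15-year versus 10-year case), and flags shared years whose values disagree beyond a tolerance. The dictionary inputs, the function name `reconcile_series`, and the 0.5% default tolerance are illustrative assumptions, not part of any vendor API, and the values are placeholders.

```python
from typing import Dict, List, Tuple

# Hypothetical input shape: {fiscal_year: revenue}, one dict per vendor.
Series = Dict[int, float]


def reconcile_series(
    vendor_a: Series, vendor_b: Series, rel_tol: float = 0.005
) -> Tuple[List[int], List[int], List[int]]:
    """Compare two vendors' annual series for the same company.

    Returns (years only in A, years only in B, shared years whose values
    differ by more than rel_tol relative to the larger absolute value).
    """
    only_a = sorted(set(vendor_a) - set(vendor_b))
    only_b = sorted(set(vendor_b) - set(vendor_a))
    mismatched = []
    for year in sorted(set(vendor_a) & set(vendor_b)):
        a, b = vendor_a[year], vendor_b[year]
        scale = max(abs(a), abs(b))
        # Skip the 0-vs-0 case; otherwise compare the relative gap.
        if scale and abs(a - b) / scale > rel_tol:
            mismatched.append(year)
    return only_a, only_b, mismatched


# Placeholder series: vendor A has 15 years, vendor B only 10.
vendor_a = {year: 100.0 + year - 2010 for year in range(2010, 2025)}
vendor_b = {year: 100.0 + year - 2010 for year in range(2015, 2025)}
vendor_b[2020] = 120.0  # placeholder discrepancy for one shared year

only_a, only_b, mismatched = reconcile_series(vendor_a, vendor_b)
print(only_a)      # [2010, 2011, 2012, 2013, 2014]
print(only_b)      # []
print(mismatched)  # [2020]
```

The output narrows an analyst's investigation to a short list of years instead of a line-by-line comparison.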

How Poor External Data Compromises Benchmarking and Forecasting

Inaccurate inputs from third-party sources directly undermine a company's ability to benchmark performance and generate reliable forecasts. The problem is often rooted in varying metric definitions, reporting periods, and identifier mapping.

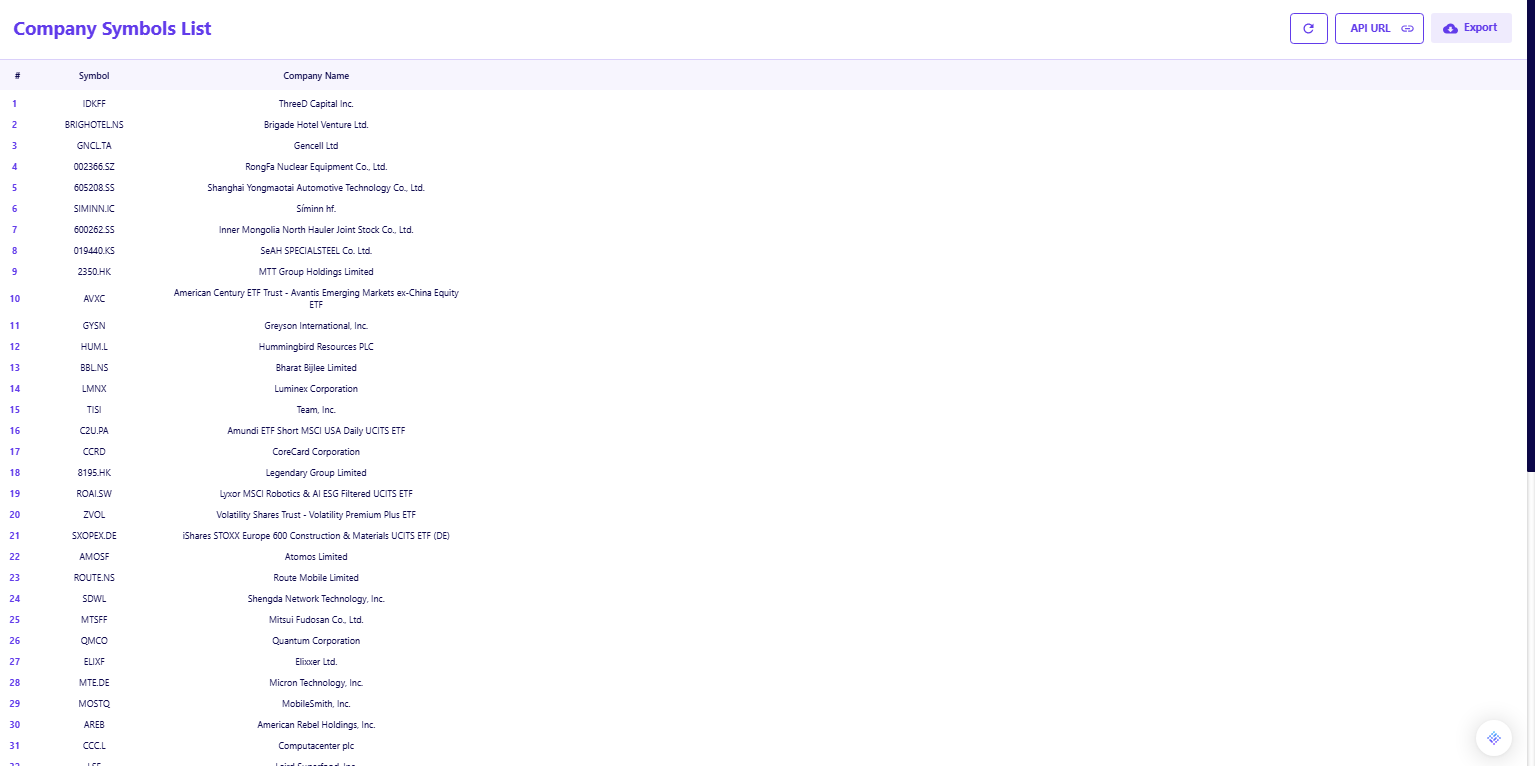

1. Benchmarking Failure: Comparing a target company's performance requires every security symbol to be correctly identified and mapped to a consistent database. When symbols conflict or are incorrectly linked to data sources, the entire peer group analysis fails. Using the FMP Company Symbols List API ensures every security is correctly identified across all internal models, providing a consistent symbol reference to eliminate identifier errors in peer comparisons.

2. Model Decay: Predictive models rely on long, consistent historical time series. If the historical data behind a model suffers from period misalignment (e.g., mixing most-recent-quarter, or MRQ, figures with TTM figures without clear tagging), the model's foundation is compromised.
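The identifier check in item 1 can be expressed as a small validation step in the ingestion pipeline. This is a minimal sketch that assumes the symbol reference list has already been downloaded and cached as records with `symbol` and `exchange` fields; the actual field names returned by the FMP Company Symbols List API may differ, so check its documentation. The function name and the tickers are hypothetical placeholders. It flags peers missing from the reference and peers the model expects on a different exchange, which is the dual-listing case.

```python
from typing import Dict, Iterable, List


def validate_peer_symbols(
    peers: Dict[str, str], reference: Iterable[Dict[str, str]]
) -> Dict[str, List[str]]:
    """Check each peer symbol against a cached symbol reference list.

    peers maps symbol -> exchange the model expects (e.g. "NYSE").
    reference rows are assumed to carry "symbol" and "exchange" keys.
    """
    # Normalize once so " peera" and "PEERA" resolve to the same key.
    listed = {row["symbol"].strip().upper(): row["exchange"] for row in reference}
    report: Dict[str, List[str]] = {"valid": [], "unknown": [], "wrong_exchange": []}
    for raw_symbol, expected_exchange in peers.items():
        symbol = raw_symbol.strip().upper()
        if symbol not in listed:
            report["unknown"].append(symbol)
        elif listed[symbol] != expected_exchange:
            report["wrong_exchange"].append(symbol)
        else:
            report["valid"].append(symbol)
    return report


reference_rows = [  # placeholder rows standing in for a cached symbol list
    {"symbol": "PEERA", "exchange": "NYSE"},
    {"symbol": "PEERA.L", "exchange": "LSE"},
]
peers = {"peera": "NYSE", "PEERA.L": "NYSE", "PEERB": "NASDAQ"}
print(validate_peer_symbols(peers, reference_rows))
# {'valid': ['PEERA'], 'unknown': ['PEERB'], 'wrong_exchange': ['PEERA.L']}
```

Running a check like this before any peer data is queried stops an unresolved or mis-mapped ticker from silently entering the comparison set.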

Table: External Data Quality Errors and Their Strategic Impact

| External Data Error Category | Strategic Risk Example | Root Cause | Estimated Financial Impact | Governance Control |
|---|---|---|---|---|
| Revenue Definition Mismatch | Comparing GAAP Net Revenue to non-GAAP Adjusted Revenue across competitors. | Inconsistent financial statement standardization across external vendors. | Distorted peer group average; misjudged market share; valuation drift. | Standardized statement delivery via FMP APIs. |
| Symbol/Ticker Conflict | Using the wrong ticker for a dual-listed stock (e.g., LSE vs. NYSE) in a global model. | Lack of reliable, verified symbol mapping in the data ingestion pipeline. | Querying data for the wrong listing; incorrect price feeds; misstated portfolio risk. | FMP Company Symbols List API verification. |
| Missing Historical Periods | Incomplete cash flow lines (e.g., missing 2005-2008 data) for a required model input. | Data provider limitations or missing data points in the external time series. | Eroded forecast reliability; inability to validate long-term growth hypotheses. | Audit-ready pipelines with data lineage checks. |
| Period Misalignment | Mixing Fiscal Year (FY) data with Trailing Twelve Months (TTM) data in a single chart. | Lack of standardized data tagging or inconsistent API calling practices. | Incorrect trend analysis; flawed debt covenant reporting. | FMP Key Metrics API for consistent TTM ratios. |
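Period misalignment is among the easiest of these errors to guard against in code: tag every value with its period basis at ingestion and refuse to assemble a series from mixed tags. The sketch below is a minimal illustration of that rule; `DataPoint` and `build_series` are hypothetical names, not part of any FMP client, and the values are placeholders.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class DataPoint:
    """One metric value tagged with the period basis it was reported on."""
    metric: str
    period_end: str  # ISO date, e.g. "2024-06-30"; sorts chronologically
    basis: str       # e.g. "FY", "TTM", "MRQ"
    value: float


def build_series(points: List[DataPoint], basis: str) -> List[DataPoint]:
    """Return points sorted by period end, refusing to mix period bases."""
    mixed = {p.basis for p in points} - {basis}
    if mixed:
        raise ValueError(f"expected only {basis} data, found {sorted(mixed)}")
    return sorted(points, key=lambda p: p.period_end)


points = [
    DataPoint("revenue", "2024-12-31", "TTM", 400.0),  # placeholder values
    DataPoint("revenue", "2024-06-30", "FY", 380.0),
]
try:
    build_series(points, "TTM")
except ValueError as err:
    print(err)  # expected only TTM data, found ['FY']
```

Failing loudly at assembly time is the design choice here: a mixed FY/TTM chart is caught before it reaches a trend analysis or covenant report.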

Building a Data Governance Framework for External Data

A comprehensive framework focused on external data is essential to transform the finance function from a consumer of risky data to a leader in Strategic Data Governance. This shift is about establishing rigorous controls for sourcing and consuming market intelligence.

Identifying the Root Causes of External Data Errors

Most external financial data quality issues stem from the lack of standardization inherent in the financial ecosystem, highlighting the need for controlled ingestion:

- Decentralized Definitions: Metrics like Adjusted EBITDA or Free Cash Flow are reported differently across regulatory jurisdictions and vendors.

- External Data Inconsistency: When pulling statements for valuation, using inconsistent report types (e.g., annual vs. TTM) or non-standardized formats leads to immediate reconciliation work.

To solve the external consistency problem, finance must demand structured access. The business problem is concrete: finance needs to compare the performance of competitors or potential acquisitions quickly, using consistent, fully reported financial metrics without manual formatting. Standardized statement delivery via the FMP Financial Statements APIs provides consistent, comparable income statement figures that accelerate competitive analysis and eliminate the manual reconciliation caused by inconsistent formatting across sources. The same consistency matters when retrieving historical market data.
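Pulling every peer's statements through one endpoint, rather than copying them from several sources, takes only a few lines of script. The sketch below assumes FMP's `income-statement` endpoint at the `stable` base URL shown, with `symbol`, `period`, `limit`, and `apikey` query parameters, and response rows carrying `date` and `revenue` fields; treat all of these as assumptions to verify against FMP's current documentation. The helper names are hypothetical.

```python
import json
from typing import Dict, List
from urllib.parse import urlencode
from urllib.request import urlopen

# Assumed base URL for the income statement endpoint; confirm in FMP's docs.
BASE_URL = "https://financialmodelingprep.com/stable/income-statement"


def income_statement_url(symbol: str, api_key: str,
                         period: str = "annual", limit: int = 5) -> str:
    """Build the request URL for one company's income statements."""
    query = urlencode({"symbol": symbol, "period": period,
                       "limit": limit, "apikey": api_key})
    return f"{BASE_URL}?{query}"


def fetch_income_statements(symbol: str, api_key: str) -> List[Dict]:
    """Download and parse the JSON list of statements (network call)."""
    with urlopen(income_statement_url(symbol, api_key), timeout=30) as resp:
        return json.load(resp)


def revenue_table(statements_by_symbol: Dict[str, List[Dict]]) -> Dict[str, Dict[str, float]]:
    """Pivot statements into {symbol: {period_end_date: revenue}}."""
    return {
        symbol: {row["date"]: row["revenue"] for row in rows}
        for symbol, rows in statements_by_symbol.items()
    }


# Usage (requires a valid API key and network access):
# statements = {s: fetch_income_statements(s, "YOUR_API_KEY") for s in ["PEERA", "PEERB"]}
# print(revenue_table(statements))
```

Because every peer passes through the same request and the same pivot, the resulting table shares one statement layout, which is what makes the figures directly comparable.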

Turning Data Standardization into Competitive Advantage

Strategic Data Governance over external data is a measurable investment that reduces risk and elevates the quality of executive decisions. This advantage is built by ascending the ladder of data maturity:

Table: External Data Maturity Model

| Maturity Stage | Defining Characteristics | External Data Quality | Risk Exposure | Financial Outcome |
|---|---|---|---|---|
| Reactive | Ad-hoc spreadsheets; manual copy/paste | Frequent errors; inconsistent fields; manual ticker fixes | High | Unreliable forecasts; costly rework |
| Managed | Centralized storage; basic vendor feeds | Latency; inconsistent definitions across vendors | Moderate | Slower allocation decisions |
| Governed | API-driven ingestion; verifiable lineage | Standardized metrics; consistent identifiers | Low | Confident, defensible strategic decisions |

Institutionalizing Standardized Ratio Calculation

The most effective way to move from the 'Managed' to the 'Governed' stage is to make APIs the mandated access point for pre-calculated financial metrics. This enforces standardization on the consuming end.

For example, when setting up an M&A screen, the finance team needs key performance indicators (KPIs) like Return on Invested Capital (ROIC) and Enterprise Value (EV) calculated consistently across hundreds of companies. This process requires access to standardized, pre-calculated performance ratios. The FMP Key Metrics API provides consistent, TTM-based performance ratios for validating investment theses. This removes the risk of calculating ratios inconsistently across internal models and ensures all analysts use the same metric definitions.
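Whether ratios arrive pre-calculated from an API or are computed in-house, the governance principle is the same: one shared definition per metric, used by every model. As an illustration, here is one common textbook definition of EV and ROIC written as a single shared module. FMP's Key Metrics API may use different definitions, so treat these formulas as an assumption rather than a description of that API; the input values are placeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Fundamentals:
    """Inputs in one currency and unit (e.g. USD millions)."""
    market_cap: float
    total_debt: float
    cash: float
    ebit: float
    tax_rate: float      # effective rate as a fraction, e.g. 0.21
    total_equity: float  # book value of equity


def enterprise_value(f: Fundamentals) -> float:
    # One common definition: market value of equity plus debt minus cash.
    return f.market_cap + f.total_debt - f.cash


def roic(f: Fundamentals) -> float:
    # NOPAT / invested capital, with invested capital = debt + equity - cash.
    nopat = f.ebit * (1 - f.tax_rate)
    invested_capital = f.total_debt + f.total_equity - f.cash
    if invested_capital <= 0:
        raise ValueError("invested capital must be positive")
    return nopat / invested_capital


target = Fundamentals(market_cap=1000.0, total_debt=200.0, cash=50.0,
                      ebit=100.0, tax_rate=0.25, total_equity=400.0)
print(enterprise_value(target))  # 1150.0
print(round(roic(target), 4))    # 0.1364
```

If every screen imports these functions instead of re-deriving them in spreadsheets, a definition change happens once and propagates everywhere.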

Operational Steps for Data Governance Adoption

The path to a governed external data model begins with high-impact changes that establish the required controls over data ingestion and analysis.

- Standardize External Data Consumption: Commit to using API access for core external benchmarks (e.g., key financial ratios) to immediately establish a governed refresh cadence.

- Define and Map Identifiers: Mandate the use of the FMP Company Symbols List API for all security identifier lookups to ensure the correct symbol is used consistently across all models.

- Utilize No-Code Integration: Finance users can configure data ingestion directly into existing dashboards without coding. Establishing API integration only requires a few structured steps, not dedicated IT resources. Learn about five key steps to integrate FMP APIs without writing a single line of code.

- Establish Data Ownership: Form a cross-functional working group (Finance/Data Science/Risk) to ratify the single, authoritative source for key external data points and agree on which data set is audit-ready.
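The ownership step can be backed by a small, explicit registry that records the ratified source for each metric and surfaces definitions due for review. The sketch below is hypothetical (class and field names are illustrative, and "Vendor A" is a placeholder), and the 365-day default reflects an annual review cadence.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Dict, List


@dataclass(frozen=True)
class MetricOwner:
    metric: str          # e.g. "Adjusted EBITDA"
    source: str          # the single ratified source for this metric
    owner: str           # accountable team
    last_reviewed: date  # when the definition was last ratified


class MetricRegistry:
    """Hypothetical registry enforcing one authoritative source per metric."""

    def __init__(self) -> None:
        self._entries: Dict[str, MetricOwner] = {}

    def register(self, entry: MetricOwner) -> None:
        existing = self._entries.get(entry.metric)
        # Re-registering the same source (e.g. a new review date) is allowed;
        # switching sources must go through the working group instead.
        if existing and existing.source != entry.source:
            raise ValueError(
                f"{entry.metric} is already sourced from {existing.source}"
            )
        self._entries[entry.metric] = entry

    def due_for_review(self, today: date, max_age_days: int = 365) -> List[str]:
        """Metrics whose definition has not been ratified within max_age_days."""
        cutoff = today - timedelta(days=max_age_days)
        return sorted(m for m, e in self._entries.items() if e.last_reviewed < cutoff)


registry = MetricRegistry()
registry.register(MetricOwner("Adjusted EBITDA", "Vendor A", "FP&A", date(2024, 1, 15)))
registry.register(MetricOwner("ROIC", "Vendor A", "Strategy", date(2025, 6, 1)))
print(registry.due_for_review(date(2025, 11, 24)))  # ['Adjusted EBITDA']
```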

This deliberate, staged approach is how successful organizations build analytic maturity and mitigate the risk of inconsistent external data. These controls only work when the external dataset itself is standardized. FMP's structured endpoints — from identifier mapping to TTM ratios — ensure every model starts from the same clean foundation.

The Future of Strategic Financial Data Quality

The conversation around external financial data quality must shift from an IT cleanup task to an executive planning priority. By treating data governance as a strategic imperative (establishing controlled ingestion, enforcing metric consistency, and using structured APIs for verifiable lineage), finance teams can retire the risks associated with inconsistent external data. The result is a finance function that accelerates capital allocation, strengthens forecasting accuracy, and maintains confidence in every strategic decision.

Frequently Asked Questions (FAQs)

What is the fastest way for FP&A to adopt standardized external APIs?

The most efficient method is to automate one recurring manual data pull (like competitor valuation ratios) by connecting it directly to a standardized API endpoint, establishing a rapid proof of concept for reliability.

Is hiring dedicated data engineers essential for this transition?

No. The initial transition should focus on training existing analysts in modern data literacy and utilizing platforms that provide pre-governed data accessible via user-friendly APIs designed for non-coders.

What is the strategic distinction between data governance and security?

Data security governs who can access data (protection). Data governance ensures the data's quality, consistency, and verifiable lineage (trust). Both are required for compliance assurance.

How do external data inconsistencies lead to "valuation drift"?

Valuation drift occurs when different analysts or models use inconsistent input data, for example one using last-twelve-months (LTM, equivalent to TTM) EBITDA and another using reported fiscal-year (FY) EBITDA. This lack of standardization introduces variance in the final valuation, creating doubt and slowing the deal process.
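A small worked example shows how large the drift can be. The numbers below are placeholders, not data for any company: applying the same peer EV/EBITDA multiple to LTM EBITDA and to the last reported fiscal-year EBITDA yields two different enterprise values for the same business.

```python
def implied_ev(multiple: float, ebitda: float) -> float:
    """Enterprise value implied by applying an EV/EBITDA multiple."""
    return multiple * ebitda


peer_multiple = 10.0  # placeholder peer-median EV/EBITDA
ltm_ebitda = 120.0    # placeholder LTM EBITDA (USD millions)
fy_ebitda = 110.0     # placeholder last reported FY EBITDA (USD millions)

ev_ltm = implied_ev(peer_multiple, ltm_ebitda)
ev_fy = implied_ev(peer_multiple, fy_ebitda)
drift = (ev_ltm - ev_fy) / ev_fy  # relative gap between the two valuations

print(ev_ltm, ev_fy, f"{drift:.1%}")  # 1200.0 1100.0 9.1%
```

Neither figure is wrong on its own; the problem is that two analysts presenting them side by side would disagree by roughly 9% purely because of the period basis.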

What is the benefit of TTM data in strategic valuation?

TTM (Trailing Twelve Months) data, provided by consistent APIs like the FMP Key Metrics API, smooths out seasonal effects and quarterly anomalies, providing a more stable and comparable basis for strategic valuation and operational efficiency assessments than simple quarterly figures.
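For a flow metric such as revenue or EBITDA, TTM is the sum of the four most recent quarters. The sketch below (a hypothetical helper with placeholder values) shows why it smooths seasonality: annualizing a strong fourth quarter on its own would overstate the run rate.

```python
from typing import List, Tuple

# (quarter-end date as ISO string, value); ISO dates sort chronologically.
Quarter = Tuple[str, float]


def ttm(quarters: List[Quarter]) -> float:
    """Trailing-twelve-month total for a flow metric (revenue, EBITDA).

    Assumes the most recent four entries are consecutive quarters with no
    gaps; balance-sheet items should not be summed this way.
    """
    if len(quarters) < 4:
        raise ValueError("need at least four quarters")
    latest_four = sorted(quarters, key=lambda q: q[0])[-4:]
    return sum(value for _, value in latest_four)


quarters = [  # placeholder values for a seasonal business
    ("2023-12-31", 120.0),
    ("2024-03-31", 90.0),
    ("2024-06-30", 110.0),
    ("2024-09-30", 95.0),
    ("2024-12-31", 130.0),
]
print(ttm(quarters))        # 425.0
print(quarters[-1][1] * 4)  # 520.0, annualizing the strong Q4 alone
```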

How frequently should metric definitions be reviewed?

Core external metric definitions (e.g., which data source provides the authoritative 'Adjusted EBITDA') should be reviewed and ratified by the finance and strategy leadership at least annually, or following any significant regulatory change, to maintain consistency across all models and analyses.
